This page exists to communicate the design behind the minimal prototype of OEP-37: Test Dev Data.


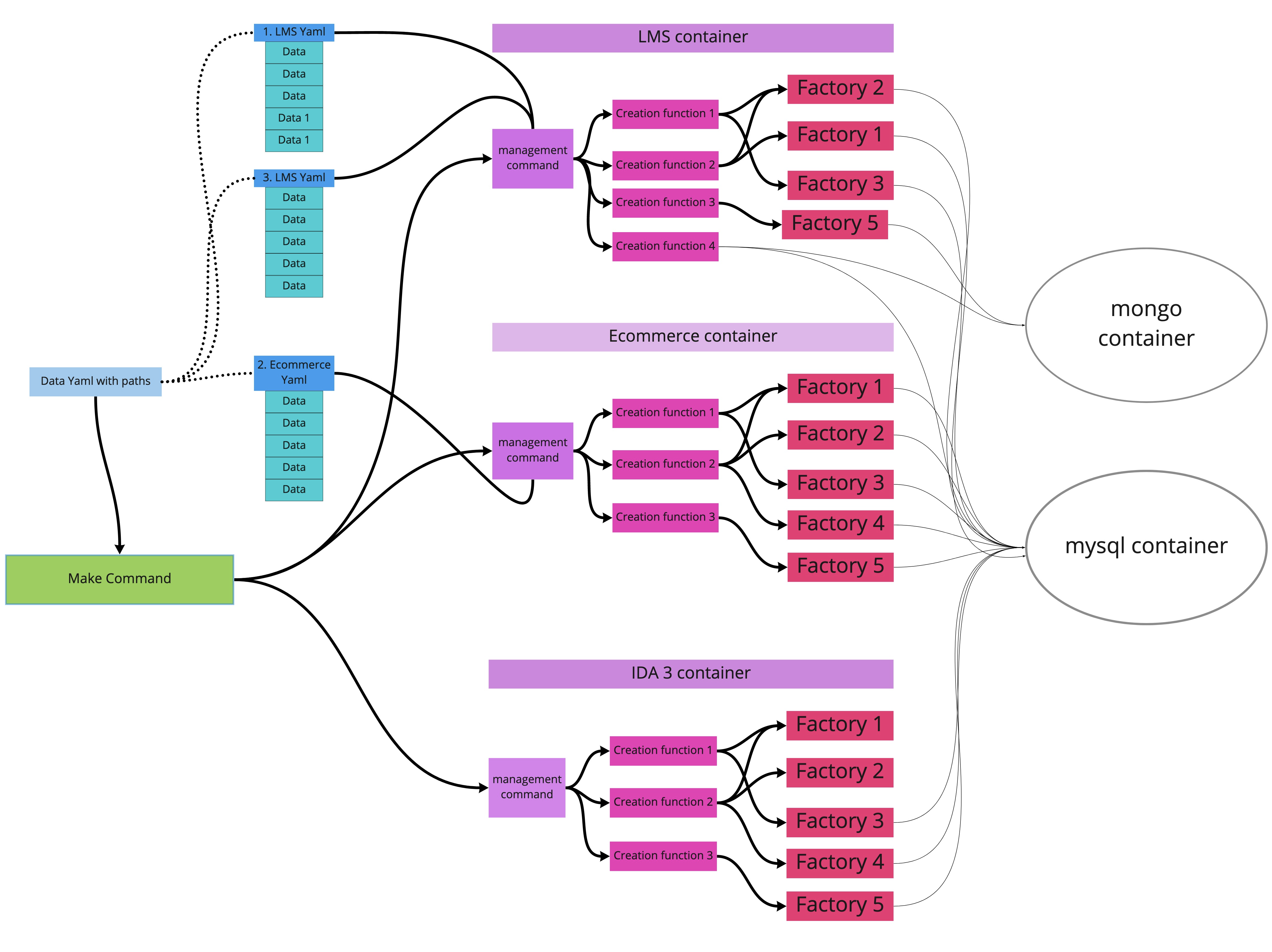

Visualization of whole system


Prototype implementation

The implementation details and PR links are listed below.


Make command

Python script called by make command

Yaml files

Top level Yaml file

LMS Yaml file

LMS Management Command

Sample Factory

Ecommerce PR: https://github.com/edx/ecommerce/pull/3360

edx-platform PR: https://github.com/edx/edx-platform/pull/27043

Devstack PR

How to use implementation:

Check out all the branches

edx-platform: msingh/oep37/mvp/userenrollments

ecommerce: diana/test-data-prototype

devstack: msingh/oep37/mvp/interface

Start a virtual env and run make requirements in the devstack repo

From the devstack repo, run make dev.load_data data_spec_top_path=test_data/data_spec_top.yaml

You should now have new data in your database

If something went wrong, here are individual commands to run in each of the containers’ shells

Design Decisions

Data will be specified in multiple Yaml files

A top level yaml file will list other yaml files

The order in which the data is built in each IDA will be specified in the top level yaml file

The data contained within the yaml files will be as minimal as possible

Foreign keys will be linked via some unique identifier for lookup (e.g. course_key, domain, username)
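To make the file layout concrete, here is a minimal sketch of what the two levels of yaml might contain once parsed. The key names ida_name and data_spec_path are taken from the naming discussion on this page. The file paths, the users and enrollments keys, and all field values are hypothetical. Plain Python dicts stand in for the parsed yaml so the sketch runs without pyyaml.

```python
# Hypothetical contents of the top level yaml file after parsing.
# Entries are processed in list order, so an IDA may appear more than once.
TOP_LEVEL_SPEC = [
    {"ida_name": "lms", "data_spec_path": "test_data/lms/courses.yaml"},
    {"ida_name": "ecommerce", "data_spec_path": "test_data/ecommerce/seats.yaml"},
    {"ida_name": "lms", "data_spec_path": "test_data/lms/enrollments.yaml"},
]

# Hypothetical contents of one per-IDA yaml file after parsing.
# Related rows are referenced by a unique identifier, not a database id.
LMS_ENROLLMENT_SPEC = {
    "users": [
        {"username": "demo_learner", "email": "demo_learner@example.com"},
    ],
    "enrollments": [
        {
            "username": "demo_learner",
            "course_key": "course-v1:edX+DemoX+Demo_Course",
            "mode": "verified",
        },
    ],
}


def build_order(top_level_spec):
    """Return (ida_name, data_spec_path) pairs in the order they will be loaded."""
    return [(entry["ida_name"], entry["data_spec_path"]) for entry in top_level_spec]


def unresolved_usernames(spec):
    """Return usernames referenced by enrollments but not defined under users."""
    defined = {user["username"] for user in spec.get("users", [])}
    referenced = {enrollment["username"] for enrollment in spec.get("enrollments", [])}
    return sorted(referenced - defined)
```

Listing lms twice in the hypothetical top level spec is one way the build order could express "courses first, then ecommerce seats, then enrollments".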

For each use of the load_test_data management command, there will be a separate yaml file with data specified. The management command will read the specified yaml file and pass on the data specification to the appropriate data generation function.
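The "read the file, then pass the specification on" step can be sketched as a small dispatch table. This is a framework-free illustration, not the prototype's code: the real command would be a Django management command. The generator functions, the GENERATORS mapping, and the users and enrollments keys are all hypothetical.

```python
def generate_users(user_specs):
    """Stand-in data generation function; returns labels for what it would create."""
    return [f"user:{spec['username']}" for spec in user_specs]


def generate_enrollments(enrollment_specs):
    """Stand-in data generation function for enrollments."""
    return [
        f"enrollment:{spec['username']}:{spec['course_key']}"
        for spec in enrollment_specs
    ]


# Maps a top level key in the yaml file to the function that handles it.
GENERATORS = {
    "users": generate_users,
    "enrollments": generate_enrollments,
}


def load_test_data(data_spec):
    """Pass each section of the parsed yaml to its data generation function."""
    created = []
    for key, specs in data_spec.items():
        generator = GENERATORS.get(key)
        if generator is None:
            raise ValueError(f"No data generation function registered for {key!r}")
        created.extend(generator(specs))
    return created
```

Failing loudly on an unknown key is a design choice of this sketch. Silently skipping unknown sections would hide typos in the yaml files.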

We will be reusing existing factories used for unit tests

Benefits of reuse

Decrease in code duplication

There are a ton of factories, so we’d be able to support a ton of data creation very quickly

Downsides

There are a ton of factories, and they were designed for different use cases. Due to the complex nature of some of these factories, it might be hard to determine their side effects on the database.

Open Design Decisions

Where do all the yamls live?

Should a creation function create everything necessary for a given datum? I think, yes. If a particular datum needs other things to be set up, the function should create them. This would align with how factory_boy works.

The concern here is the sort of ‘circular dependencies’ in data (e.g. lms creates courses, then ecommerce creates seats/modes in lms, then users can enroll as verified).
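As a rough illustration of "create everything necessary", the sketch below uses an in-memory dict as a stand-in for the database. All function names and the data layout are hypothetical. The creation function for an enrollment makes sure the user and course it depends on exist before creating the enrollment itself.

```python
def new_database():
    """Return an empty in-memory stand-in for the database."""
    return {"users": {}, "courses": {}, "enrollments": {}}


def get_or_create_user(db, username):
    """Look the user up by its unique identifier, creating it if needed."""
    return db["users"].setdefault(username, {"username": username})


def get_or_create_course(db, course_key):
    """Look the course up by its unique identifier, creating it if needed."""
    return db["courses"].setdefault(course_key, {"course_key": course_key})


def create_enrollment(db, username, course_key, mode="audit"):
    """Create an enrollment along with any user or course it depends on."""
    user = get_or_create_user(db, username)
    course = get_or_create_course(db, course_key)
    enrollment = {"user": user, "course": course, "mode": mode}
    db["enrollments"][(username, course_key)] = enrollment
    return enrollment
```

This only covers dependencies inside one service. It does not address the cross-service case, where a verified enrollment in lms needs ecommerce to have created the seat first.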

How do we shorten the dev cycle for this and make it repeatable?

Option 1: Take snapshot of provisioned database and make it easier for people to go back to that state

We should do this for the first pilot, with the aim of eventually enabling Option 2.

Option 2: Assume this implementation method has replaced most of provisioning. Create the ability to return to an empty database (one that still has the data schema, but none of the data).

How do we want to pilot this?

Likely Option: request that one team work with us extensively on this. Capture their use case.

Recommended decision: If we need to create data from outside of a factory, the code to create data should live in its own creation function.

When the make command is used to create data, it calls a python script which calls the management command in each of the specified services’ containers. This requires the user to have pyyaml installed. Should we make it a given that devstack commands are run from a virtualenv? According to a very quick poll by Tim in devstack-questions, very few people use venvs in devstack.
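The chain described above (make command, python script, management command per container) could look roughly like the sketch below. It only builds the commands and does not run them. The load_data command name, the --data-file-path flag, and the ida_name and data_spec_path keys come from this page. The edx.devstack.&lt;ida&gt; container naming and the simplified manage.py invocation are assumptions, not taken from the prototype.

```python
import shlex


def build_container_commands(top_level_spec):
    """Return one docker exec argv list per entry in the parsed top level spec."""
    commands = []
    for entry in top_level_spec:
        ida = entry["ida_name"]
        path = entry["data_spec_path"]
        # Assumed invocation; a real service may need extra arguments here.
        manage = f"python manage.py load_data --data-file-path {shlex.quote(path)}"
        # Assumed container naming convention.
        commands.append(["docker", "exec", f"edx.devstack.{ida}", "bash", "-c", manage])
    return commands
```

A real script would parse the top level yaml file on the host (which is where the pyyaml requirement comes from) and hand each argv list to something like subprocess.run.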

These scripts will not be idempotent. Modifications will be made to the database each time.

Do we want to limit the changes that these scripts make or do we want to allow them to make a full set of new entries each time?
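The two options in this question can be contrasted with a small sketch. A plain list stands in for a database table, and both function names are hypothetical. The first function adds a row on every run. The second reuses an existing row that matches on a unique field, so repeated runs leave the table unchanged.

```python
def create_always(rows, spec):
    """Non-idempotent: every call appends a new row."""
    rows.append(dict(spec))
    return rows[-1]


def create_if_missing(rows, spec, unique_field="username"):
    """Idempotent: reuse the existing row that matches on the unique field."""
    for row in rows:
        if row[unique_field] == spec[unique_field]:
            return row
    rows.append(dict(spec))
    return rows[-1]
```

Against a real database, the first approach would also run into unique constraints unless each run generates fresh identifiers.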

How exactly do we version the creation functions?

Names?

OEP name: maybe from Test Data to Local Data

management command: from load_data to load_dev_data

yaml file keys: from ida_name to ida, from data_spec_path to path

--data-file-path to --path

Name of the whole framework

What do we want to call this method of data loading to differentiate it from others in documentation?

How opinionated do we want to be about what information you can specify about a particular datum? Example: for the User model, should we limit it to unique fields (username, email)? Or should developers be able to specify whatever they want (max flexibility)? The current implementation goes for max flexibility, but I imagine this might make it harder to do versioning later.
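For comparison, the more opinionated option could be sketched as a check on the fields a spec is allowed to contain. The allowed set and the function name are hypothetical. The strict flag switches between the restricted behavior and the max flexibility behavior of the current implementation.

```python
ALLOWED_USER_FIELDS = {"username", "email"}


def check_user_spec(spec, strict=True):
    """Return the spec unchanged, or raise if strict mode sees extra fields."""
    extra = sorted(set(spec) - ALLOWED_USER_FIELDS)
    if strict and extra:
        raise ValueError(f"Unsupported user fields: {', '.join(extra)}")
    return spec
```

A fixed field list like this gives a versioned creation function a smaller surface to keep stable, at the cost of flexibility for developers.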

Possible Future Roadmap

Different stages

Prototype for ARCH-BOM [in-progress]

Develop MVP

tasks to do

Finalize and fully implement make interface

load_data management command in each of the necessary IDAs

Add tests for the management command and data generation functions

minimum creation functions in each of the management commands

Documentation

Walkthrough of how to extend system yourself

Walkthrough of how to use system to create custom local data

Overarching system design

Recruit another Squad to work with us on this

Goal: have MVP ready to be used by another squad

User Testing with another Squad

Tasks to do

Showcase this method to external Squad

This could be a synchronous meeting or an email/document

[maybe] Ideate with them as to how they would use this method

Have a person on stand-by to answer any questions from the Squad and to handle any roadblocks

Continue improving both implementation and documentation based on feedback

Goal: Have method ready to be spread to whole org

Advertise this tool to org

Tasks to do

Further mature implementation and Documentation

Email, Slack post, eng-all-hands presentation

Have a person on call, ready to continue advertising and answering questions about this method

Replace most of provisioning with this method of loading data

Requirements

TBD

...